The ExtendSim Academic Research Grant program has helped many, many students over the years achieve their dreams. At the same time, it has supported essential phases of innovative research projects in universities worldwide.

Here are just a few of the projects that have used ExtendSim through the Academic Research Grant program and helped students earn their advanced degrees.

Completed Academic Research Grants

Biofuel Supply Chain

Simulation-Based Approach for the Optimization of a Biofuel Supply Chain

Hernan Chavez Paura Garcia

University of Texas at San Antonio

PhD in Supply Chain Optimization • February 23, 2017

Project presented at

The 2017 IISE Annual Conference.

Abstract

The billion-ton study led by the Oak Ridge National Laboratory indicates that the U.S. can sustainably produce over a billion tons of biomass annually. However, delivering the biomass needed to meet these goals is particularly challenging, mainly because of the physical properties of biomass. This research work focuses on the use of agricultural residues to produce second-generation biofuels. Second-generation biomass exhibits more quality variability (e.g., higher ash and moisture contents) than first-generation biomass. The purpose of this study is to quantify the cost of imperfect feedstock quality in a biomass-to-biorefinery supply chain (SC) and to develop a discrete event simulation coupled with an optimization algorithm for designing biofuel SCs. This work presents a novel optimization approach based on an extended Integrated Biomass Supply and Logistics (IBSAL) simulation model for estimating collection, storage, and transportation costs. The presented extension of IBSAL considers the cost incurred for having imperfect feedstock quality and finds the optimal SC design. The applicability of this methodology is illustrated with a case study in Ontario, Canada. A converging set of non-dominated solutions is obtained from computational experiments, and sensitivity analysis is performed to evaluate the impact of different scenarios on overall costs. Preliminary results are presented.

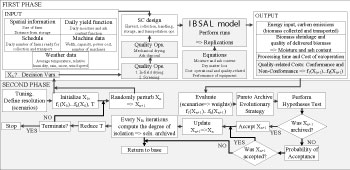

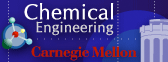

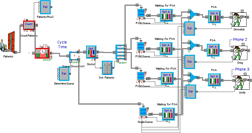

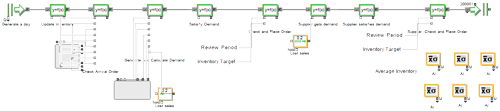

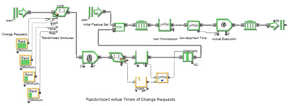

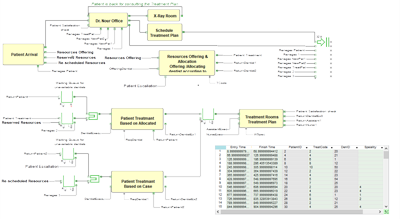

IBSAL-SimMOpt Approach

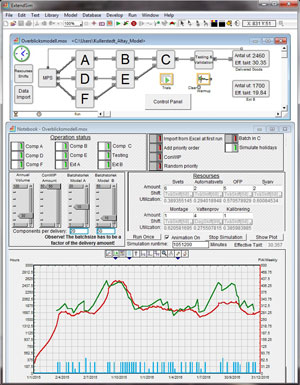

The approach presented in this work is a two-phase model that uses an extension of the IBSAL model in the initial phase and searches for a near-optimal set of solutions in the second phase using an optimization procedure based on the SimMOpt model. The IBSAL model is a time-dependent discrete event simulation (DES) model with activity-based costing. The model in the proposed approach estimates the cost of imperfect feedstock quality and evaluates its effect on the performance of the SC.

The SimMOpt model is a simulation-based multi-objective optimization approach based on stochastic Simulated Annealing (SA).

The solutions for near-optimal quality-related costs are found using the extension of IBSAL implemented in ExtendSim and the SimMOpt-based procedure written in MS VBA. The figure above is a diagram of the proposed approach.
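The SimMOpt procedure itself is written in VBA and its internals are not described here. As a rough illustration only, the sketch below shows a generic weighted-sum simulated annealing search over binary decisions (one per activity, e.g., field drying or screening at a farm). It assumes a hypothetical `evaluate` function standing in for an ExtendSim simulation run that returns a (conformance cost, non-conformance cost) pair; the cooling parameters and the toy cost data are arbitrary assumptions, not values from the study.

```python
import math
import random
from typing import Callable, List, Tuple

# Hypothetical encoding: one 0/1 decision per quality activity (e.g., drying, screening).
Solution = List[int]
Objectives = Tuple[float, float]  # (conformance cost, non-conformance cost)


def weighted_sa(
    evaluate: Callable[[Solution], Objectives],
    n_vars: int,
    weights: Tuple[float, float],
    t0: float = 100.0,
    cooling: float = 0.95,
    iters_per_temp: int = 20,
    t_min: float = 1e-3,
    seed: int = 0,
) -> Tuple[Solution, float]:
    """Minimise a weighted sum of two objectives with simulated annealing."""
    rng = random.Random(seed)

    def score(sol: Solution) -> float:
        f1, f2 = evaluate(sol)  # in the real approach this would be a simulation run
        return weights[0] * f1 + weights[1] * f2

    current = [rng.randint(0, 1) for _ in range(n_vars)]
    cur_score = score(current)
    best, best_score = current[:], cur_score
    t = t0
    while t > t_min:
        for _ in range(iters_per_temp):
            cand = current[:]
            i = rng.randrange(n_vars)
            cand[i] = 1 - cand[i]  # neighbour: flip one binary decision
            cand_score = score(cand)
            delta = cand_score - cur_score
            # Always accept improvements; accept worse moves with probability exp(-delta / t).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, cur_score = cand, cand_score
                if cur_score < best_score:
                    best, best_score = current[:], cur_score
        t *= cooling  # geometric cooling schedule
    return best, best_score


# Toy data (made up): cost of performing each activity vs. penalty for skipping it.
COSTS = [1.0, 5.0, 1.0, 5.0]
PENALTIES = [3.0, 2.0, 3.0, 2.0]


def toy_evaluate(sol: Solution) -> Objectives:
    conformance = sum(c for c, x in zip(COSTS, sol) if x)
    nonconformance = sum(p for p, x in zip(PENALTIES, sol) if not x)
    return conformance, nonconformance


best, best_score = weighted_sa(toy_evaluate, n_vars=4, weights=(0.5, 0.5))
```

Running the search for several weight pairs, such as the (0.4/0.6) and (0.6/0.4) weights reported in the results, yields candidate solutions whose cost pairs can then be screened for non-dominance.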

Case Study

The characteristics of the system described in this research include a geographical implementation (i.e., Southern and Western Ontario, Canada), crop availability (ac), corn yield (bu/ac), and sustainable production (dry tonne/ac), among others. The geographical implementation corresponds to 20 farms located in the following counties: Lambton, Chatham-Kent, Middlesex, and Huron.

The initial moisture content is modeled by ~U(0.6, 0.8). The moisture content after the natural drying process is assumed to follow ~U(0.15, 0.3). The moisture content requirement for thermochemical processes is 20%; if the content remains above 20%, the stover goes through a mechanical drying process. The cost of the natural air drying system is given by (0.014 * (Initial Moisture Content – Final Moisture Content) * 100) + 0.05 per bushel, and the cost of the in-bin stirred system is given by (0.033 * (Initial Moisture Content) * 100) + 0.048 per bushel. Similarly, the initial ash content follows ~U(0.08, 0.12). The ash content after the screening process is assumed to follow ~U(0.1, Initial Ash Content). The screening cost is given by 135 * (Initial Ash Content – Final Ash Content) per dry tonne, and the cost of disposing of ash is given by 28.86 * (Final Ash Content) per dry tonne. The binary decision variables in this model represent the decisions of performing the field drying and the screening activities at each farm.
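The cost expressions above translate directly into small functions. The sketch below is a hedged transcription, with moisture and ash contents expressed as fractions (0.7 = 70%). The function names are hypothetical, and the choice of which moisture value feeds the stirred-bin formula when mechanical drying is triggered is an assumption, since the text does not state it.

```python
import random
from typing import Tuple

# Moisture requirement for thermochemical processes (20%), from the case study.
MOISTURE_LIMIT = 0.20


def natural_drying_cost(initial_mc: float, final_mc: float) -> float:
    """Natural air drying cost per bushel."""
    return 0.014 * (initial_mc - final_mc) * 100 + 0.05


def stirred_bin_drying_cost(initial_mc: float) -> float:
    """In-bin stirred (mechanical) drying cost per bushel."""
    return 0.033 * initial_mc * 100 + 0.048


def needs_mechanical_drying(mc: float) -> bool:
    """Stover still above the 20% limit must be dried mechanically."""
    return mc > MOISTURE_LIMIT


def screening_cost(initial_ash: float, final_ash: float) -> float:
    """Screening cost per dry tonne."""
    return 135 * (initial_ash - final_ash)


def ash_disposal_cost(final_ash: float) -> float:
    """Ash disposal cost per dry tonne."""
    return 28.86 * final_ash


def sample_moisture(rng: random.Random) -> Tuple[float, float]:
    """Draw (initial, after natural drying) moisture from the stated uniform distributions."""
    return rng.uniform(0.6, 0.8), rng.uniform(0.15, 0.3)


rng = random.Random(42)
mc0, mc1 = sample_moisture(rng)
field_cost = natural_drying_cost(mc0, mc1)
# Assumption: the post-field-drying moisture mc1 is used in the stirred-bin formula.
extra_cost = stirred_bin_drying_cost(mc1) if needs_mechanical_drying(mc1) else 0.0
```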

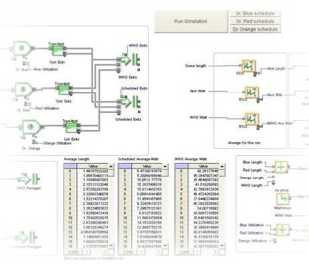

Results and Conclusions

The SA was tuned through designed computational experiments. The SA schedules have a significant effect on this set of preliminary solutions. Figure 3 shows the Pareto front obtained with four schedules. The non-dominated solution that balances the conformance and non-conformance costs (i.e., the (0.4/0.6) and (0.6/0.4) weights) shows a conformance cost (the cost incurred to prevent poor biomass quality) of $49,899.47 and a non-conformance cost (the cost incurred to fix poor biomass quality) of $16,496.83 across all 20 farms. This non-dominated solution was found with the schedule that computes the largest number of initial solutions (i.e., Schedule 4 → 50 initial solutions). These preliminary results highlight the trade-off between conformance and non-conformance activities in a biofuel SC. The impact of quality-related activities on SC topology, design, and planning decisions will be studied next.
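A Pareto front like the one in Figure 3 keeps only non-dominated solutions. A minimal sketch of that filter for two minimised costs follows; the candidate points are made up for illustration and are not the study's results.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (conformance cost, non-conformance cost), both minimised


def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no worse on both costs and strictly better on at least one."""
    return a[0] <= b[0] and a[1] <= b[1] and (a[0] < b[0] or a[1] < b[1])


def non_dominated(points: List[Point]) -> List[Point]:
    """Return the Pareto front, keeping the first copy of duplicate points."""
    front: List[Point] = []
    for p in points:
        if not any(dominates(q, p) for q in points) and p not in front:
            front.append(p)
    return front


candidates = [(1, 5), (2, 2), (3, 3), (5, 1), (2, 2)]
front = non_dominated(candidates)
```

This quadratic scan is fine for the handful of solutions produced per SA schedule; larger archives would usually sort by one objective first.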

Acknowledgements

This material is based upon work supported by the U.S. Department of Energy, Office of Energy Efficiency and Renewable Energy, Bioenergy Technologies Office (4000142556) and the U.S. Department of Agriculture/National Institute of Food and Agriculture (2015-38422-24064). The fellowship from the Mexican Council for Science and Technology (CONACYT) is gratefully acknowledged. Imagine That! is gratefully acknowledged for donating a full version of ExtendSim through the ExtendSim Research Grant. The research work on the IBSAL model by Sokhansanj, Turhollow, Ebadian and Webb informed the development of the proposed approach.

Previous Publications and Refereed Conference Proceedings

Aboytes, M., Chavez, H., Krishnaiyer, K., Stankus, S. & Taherkhorsandi, M. "Improving Radio Frequency Identification Accuracy in a Warehouse Setting". Abstract accepted in the 2016 Engineering Lean & Six Sigma Conference, San Antonio, TX, September 14 - 16, 2016.

Chávez, H. & Castillo-Villar, K. K. "Stochastic Multi-Objective Simulated Annealing for the Optimization of Machining Parameters". Abstract accepted in the 2016 Industrial and Systems Engineering Research Conference (ISERC), Anaheim, California, May 21 - 24, 2016.

2016 ISERC Best Track Paper: Manufacturing and Engineering Design.

Chávez, H., Castillo-Villar, K. K., Herrera, L., & Bustos, A. "Simulation-based multi-objective model for supply chains with disruptions in transportation". Robotics and Computer-Integrated Manufacturing. Ms. Ref. No.: RCIM-D15-00130. 2016.

Stankus, S., Chávez, H., Castillo-Villar, K. K., & Feng, Y. "A Simulation-based Optimization Approach to Modeling a Fast-track Emergency Department". Abstract accepted in the 2015 Industrial and Systems Engineering Research Conference (ISERC), Nashville, Tennessee, May 30 - June 2, 2015.

Chavez, H. & Castillo-Villar, K. K. "A Preliminary Simulated Annealing for Resilience Supply Chains". Paper in Proceedings of the IEEE Symposium Series on Computational Intelligence 2014, Orlando, Florida, USA, December 12, 2014.

Chavez, H., Castillo-Villar, K. K., Herrera, L. & Bustos, A. "Simulation-based Optimization Model for Supply Chains with Disruptions in Transportation". Paper submitted to the Flexible Automation and Intelligent Manufacturing (FAIM) Conference 2014, San Antonio, TX, May 20-23, 2014.

Scientific Posters

Chavez, H., Webb, E., Castillo-Villar, K.K., Ebadian, M., & Sokhansanj, S. "Modeling Cost of Quality in a Discrete Event Biomass Supply Chain". IBSS (Southern Partnership for Integrated Biomass Supply Systems) Annual Meeting. July 27th, 2016.

Chavez, H., Webb, E., Castillo-Villar, K.K., Ebadian, M., & Sokhansanj, S. "Modeling Cost of Quality in a Discrete Event Biomass Supply Chain". ORISE (Oak Ridge Institute for Science and Education) Summer Graduate, Post Graduate, Employee Participant, and Faculty Poster Session. August 9th, 2016.

Technical Reports

Castillo-Villar, K. K., Rogers, D. & Chavez, H. "AFV's Fleet Replacement Optimization (Alternative Transportation Initiatives)". City Public Services (CPS) Energy, San Antonio Water System (SAWS) & Austin Public Transit (Capital Metro). Summer 2015. Granting Agency: CPS through Texas Sustainable Energy Research Institute. 2015.

Castillo-Villar, K.K., & Chávez, H. "Simulation-based Optimization Model for Supply Chains with Disruptions in Transportation", Mexico Center Educational Research Fellowship - International Study Fund, 2013-2014, Funded by Mexico Center, UTSA. 2014.

Castillo-Villar, K.K., & Chávez, H. "Reliability Project in Toyota Manufacturing from Reactive to Proactive Maintenance, San Antonio, TX". The University of Texas at San Antonio, Technical Report, June 2014, 68 and 21 pages. Granting Agency: Toyota Motor Manufacturing Texas. 2014.

Biomass to Commodity Chemicals

Simulation-Based Optimization of Biomass Utilization to Energy and Commodity Chemicals

Ismail Fahmi

University of Tulsa

PhD in Chemical Engineering • August 2013

Project presented at

12AIChE Conference

Pittsburgh, PA

November 1, 2012

oral presentation

poster presentation

Project published in

Chemical Engineering Research and Design

Special Issue: Computer Aided Process Engineering (CAPE) Tools for a Sustainable World

Volume 91, Issue 8 • August 2013

Abstract

Incorporating non-traditional feedstocks, e.g., biomass, into the chemical process industry (CPI) will require investments in research & development (R&D) and capacity expansions. The impact of these investments on the evolution of the biomass-to-commodity-chemicals (BTCC) system should be studied to ensure a cost-effective transition with acceptable risk levels. The BTCC system includes both exogenous (decision-independent) uncertainties, e.g., product demands, and endogenous (decision-dependent) uncertainties, e.g., the change in technology cost with investment levels.

This paper presents a prototype simulation-based optimization (SIMOPT) approach to study the evolution of the BTCC system under exogenous and endogenous uncertainties, and provides a preliminary analysis of how the choice among three sampling methods (Monte Carlo, Latin Hypercube, and Halton sequence) for generating the simulation runs affects the computational cost of the SIMOPT approach. We found that the simulation-based optimization framework we developed has two major computational costs:

- The cost associated with the required number of samples to obtain the model with sufficient statistical significance.

- The cost associated with solving the optimization problem.
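To make the three sampling methods concrete, the sketch below generates points in the unit hypercube with each of them. Mapping these points onto the BTCC model's uncertain parameters (e.g., demand growth or technology cost) is not shown and would be an assumption; SciPy's `scipy.stats.qmc` module offers production-grade `Halton` and `LatinHypercube` samplers.

```python
import random
from typing import List, Tuple

PRIMES = [2, 3, 5, 7, 11, 13]  # one Halton base per dimension (up to 6 dimensions here)


def radical_inverse(i: int, base: int) -> float:
    """Mirror the base-`base` digits of i about the radix point (van der Corput)."""
    f, r = 1.0, 0.0
    while i > 0:
        f /= base
        r += f * (i % base)
        i //= base
    return r


def halton(n: int, dims: int) -> List[Tuple[float, ...]]:
    """First n Halton points in [0, 1)^dims, skipping index 0 (the origin)."""
    return [tuple(radical_inverse(i, PRIMES[d]) for d in range(dims)) for i in range(1, n + 1)]


def latin_hypercube(n: int, dims: int, rng: random.Random) -> List[Tuple[float, ...]]:
    """Exactly one point per equal-width stratum in every dimension, randomly paired."""
    cols = []
    for _ in range(dims):
        strata = list(range(n))
        rng.shuffle(strata)
        cols.append([(k + rng.random()) / n for k in strata])
    return [tuple(col[i] for col in cols) for i in range(n)]


def monte_carlo(n: int, dims: int, rng: random.Random) -> List[Tuple[float, ...]]:
    """Plain independent uniform sampling."""
    return [tuple(rng.random() for _ in range(dims)) for _ in range(n)]
```

The Halton points are deterministic and spread evenly as n grows, which is one intuition for why they can reach a statistically stable estimate with fewer samples than plain Monte Carlo.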

The results of a simplified case study suggest that the annual increase in demand is the dominant factor in the total cost of the BTCC system. The results also suggest that using the Halton sequence as the sampling method requires the smallest number of samples, i.e., the least computational cost, to achieve a statistically significant solution. Other conclusions drawn from this work are:

- BTCC system evolution is highly dependent on the costs of the raw material.

- Investing in non-renewable processing technologies is attractive in the long term.

- The developed SIMOPT framework can be used to include the endogenous and exogenous uncertainties of the BTCC system evolution.

- The developed optimization module is universal, meaning that it is independent of technology types.

- The developed optimization module can also incorporate the relationship between a technology's maturity stage and its ability to contribute to overall production.

- The Halton sequence is shown to be the most appropriate sampling method.

- Bilinear relaxation with a linearly segmented tight relaxation, coupled with relaxation of the nonlinear terms using linear over- and underestimators, is shown to provide a good initialization for the optimization module.
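The last point concerns relaxing bilinear terms. A standard construction is the McCormick envelope for w = x * y, and segmenting the range of x tightens it (piecewise McCormick). Whether the paper uses exactly this construction is an assumption. The sketch only evaluates the envelope bounds at a given point, whereas inside an MILP the active segment would be chosen by binary variables.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_lo, x_hi, y_lo, y_hi)


def mccormick_bounds(x: float, y: float, box: Box) -> Tuple[float, float]:
    """Lower and upper McCormick bounds on w = x * y over the given box."""
    xl, xu, yl, yu = box
    lower = max(xl * y + x * yl - xl * yl, xu * y + x * yu - xu * yu)
    upper = min(xu * y + x * yl - xu * yl, xl * y + x * yu - xl * yu)
    return lower, upper


def piecewise_mccormick_bounds(x: float, y: float, box: Box, n_segments: int) -> Tuple[float, float]:
    """Split [x_lo, x_hi] into equal segments and apply McCormick on the one containing x."""
    xl, xu, yl, yu = box
    width = (xu - xl) / n_segments
    k = min(int((x - xl) / width), n_segments - 1)  # x == x_hi falls in the last segment
    segment = (xl + k * width, xl + (k + 1) * width, yl, yu)
    return mccormick_bounds(x, y, segment)


unit_box = (0.0, 1.0, 0.0, 1.0)
single = mccormick_bounds(0.25, 0.25, unit_box)
segmented = piecewise_mccormick_bounds(0.25, 0.25, unit_box, 2)
```

At (0.25, 0.25), where the true product is 0.0625, the gap between the bounds halves from 0.25 to 0.125 with two segments, which is the sense in which segmenting makes the relaxation "tight".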

Other Publications by this Researcher

Fahmi, Ismail and Selen Cremaschi. "A Prototype Simulation-based Optimization Approach to Model Feedstock Development for Chemical Industry". Proceedings of the 22nd European Symposium on Computer Aided Process Engineering, 2012.

Fahmi, Ismail and Selen Cremaschi. "Stage-gate Representation of Feedstock Development for Chemical Process Industry". Foundations of Computer-Aided Process Operations, 2012.

Call Centers • Routing Rules

Knowledge Management in Call Centers: How Routing Rules Influence Expertise in the Presence of On-the-Job Learning

Geoffrey Ryder

University of California at Santa Cruz

PhD in Operations Research • March 16, 2011

Abstract

In this paper, the effect of routing rules on agent learning is studied. A nonlinear optimization framework is developed for two kinds of expertise objectives: one that seeks an equal distribution of experience across the workforce (effectively cross-training) and one that aims to develop specialized expertise by prioritizing the routing of specific customer inquiries to specific agents. Analytical models of call center operations are inadequate for this task, so instead we turn to discrete-event simulation and evaluate the effect of routing policies on agent expertise with a custom simulator developed in the ExtendSim modeling environment. Simulation results describe an efficient frontier of routing policies that depends on the underlying expertise objective function.
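To make the cross-training versus specialization contrast concrete, here is a toy sketch, not the author's ExtendSim simulator. It ignores arrival times, queueing, and forgetting, and it assumes a hypothetical power-law learning curve (`handle_time`, with made-up `t0` and `b`) in which handling time falls with prior calls of the same type.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Experience = DefaultDict[Tuple[int, int], int]  # (agent, call type) -> calls handled so far


def route_specialist(call_type: int, n_agents: int, exp: Experience) -> int:
    """Specialization: each call type always goes to the same agent."""
    return call_type % n_agents


def route_cross_train(call_type: int, n_agents: int, exp: Experience) -> int:
    """Cross-training: send the call to the least experienced agent for this type."""
    return min(range(n_agents), key=lambda a: exp[(a, call_type)])


def handle_time(prior_calls: int, t0: float = 10.0, b: float = 0.3) -> float:
    """Hypothetical learning curve: time shrinks as a power of prior experience."""
    return t0 * (1 + prior_calls) ** (-b)


def simulate(
    calls: List[int], n_agents: int, rule: Callable[[int, int, Experience], int]
) -> Tuple[Experience, float]:
    """Route each call in order, accumulating experience and total handling time."""
    exp: Experience = defaultdict(int)
    total_time = 0.0
    for c in calls:
        agent = rule(c, n_agents, exp)
        total_time += handle_time(exp[(agent, c)])
        exp[(agent, c)] += 1
    return exp, total_time


calls = [0, 1, 0, 1, 0, 1]
spec_exp, spec_time = simulate(calls, 2, route_specialist)
cross_exp, cross_time = simulate(calls, 2, route_cross_train)
```

In this toy, specialization concentrates experience and lowers total handling time, while cross-training spreads experience across agents. That is the kind of trade-off the efficient frontier of routing policies describes.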

Other Publications by this Researcher

Ryder, G. "Managing Changing Service Capacity Based on Agent Performance Data". INFORMS Annual Meeting, Service Industry III Session, Nov. 7, 2007.

Ryder, G. "How Learning and Forgetting Affect the Optimal Work Policy." INFORMS Annual Meeting, Management of Complex Service Systems Session, Nov. 4, 2007.

Ryder, G. and Ross, K. "Optimal Service Rules in the Presence of Learning and Forgetting". Sixteenth Annual Frontiers in Service Conference, San Francisco. October 4, 2007.

Ryder, G., Ross, K., and Musacchio, J. "Optimal service policies under learning effects". International Journal of Services and Operations Management, Issue 6, Vol. 4, 2008.

Ryder, G. and Ross, K. "Optimal service policies in the presence of learning and forgetting". Applied Probability Track, INFORMS Annual Conference, Pittsburgh, PA, 2006.

Ryder, G. "A probability collectives approach to weighted clustering algorithms for ad hoc networks". IASTED CCN Conference, Marina Del Rey, CA, October 2005.

Chemical • Processing Multi-Products

An Efficient Method for Optimal Design of Large-Scale Integrated Chemical Production Sites with Endogenous Uncertainty

Sebastian Terrazas-Moreno, Ignacio E. Grossmann, John M. Wassick, Scott J. Bury, Naoko Akiya

Carnegie Mellon University

PhD in Chemical Engineering • March 2012

Project published in

Computers & Chemical Engineering

Volume 37 • February 2012

Abstract

Integrated sites are tightly interconnected networks of large-scale chemical processes. Given this network structure, disruptions in any of its nodes, or individual chemical processes, can propagate and disrupt the operation of the whole network. Random process failures that reduce or shut down production capacity are among the most common disruptions. The impact of such disruptive events can be mitigated by adding parallel units and/or intermediate storage. In this paper, the design of large-scale integrated sites considering random process failures is addressed. In previous work (Terrazas-Moreno et al., 2010), a novel mixed-integer linear programming (MILP) model was proposed to maximize the average production capacity of an integrated site while minimizing the required capital investment. The present work addresses large-scale problem instances with a strategy that consists of two elements. On one hand, we use Benders decomposition to overcome the combinatorial complexity of the MILP model. On the other hand, we exploit discrete-rate simulation tools to obtain a relevant reduced sample of failure scenarios or states. We first illustrate this strategy in a small example. Next, we address an industrial case study where we use a detailed simulation model to assess the quality of the design obtained from the MILP model.
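The paper obtains its reduced set of failure states from discrete-rate simulation. A simpler analytical stand-in, shown here only to illustrate what a "reduced sample of states" means, assumes independent units that are either up or down with known availabilities (hypothetical numbers). It enumerates every state, ranks the states by probability, and keeps the most likely ones until a target share of the probability mass is covered. This is not the authors' procedure, and full enumeration grows as 2^n, which is exactly why large sites need something smarter.

```python
from itertools import product
from math import prod
from typing import List, Sequence, Tuple

State = Tuple[int, ...]  # 1 = unit up, 0 = unit failed


def reduced_states(availability: Sequence[float], coverage: float) -> List[Tuple[State, float]]:
    """Most probable up/down states whose cumulative probability reaches `coverage`."""
    states = []
    for s in product((1, 0), repeat=len(availability)):
        # Independence assumption: state probability is the product over units.
        p = prod(a if up else 1 - a for a, up in zip(availability, s))
        states.append((s, p))
    states.sort(key=lambda sp: sp[1], reverse=True)
    kept, total = [], 0.0
    for s, p in states:
        kept.append((s, p))
        total += p
        if total >= coverage:
            break
    return kept


kept = reduced_states([0.9, 0.8], coverage=0.85)
```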

"A Mixed-Integer Linear Programming Model for Optimizing the Scheduling and Assignment of Tank Farm Operations"

Sebastian Terrazas-Moreno, Ignacio E. Grossmann, John M. Wassick

Carnegie Mellon University

Abstract

This paper presents a novel mixed-integer linear programming (MILP) formulation for the Tank Farm Operation Problem (TFOP), which involves simultaneous scheduling of continuous multi-product processing lines and the assignment of dedicated storage tanks to finished products. The objective of the problem is to minimize blocking of the finished lines by obtaining an optimal schedule and an optimal allocation of storage resources. The novelty of this work is the integration of a tank assignment problem with a scheduling problem where a dedicated storage tank has to be chosen from a tank farm given the volumes, sequencing, and timing of production of a series of products. The scheduling part of the model is based on the Multi-operation Sequencing (MOS) model by Mouret et al. (2011). The formulation is tested on three examples of different size and complexity.

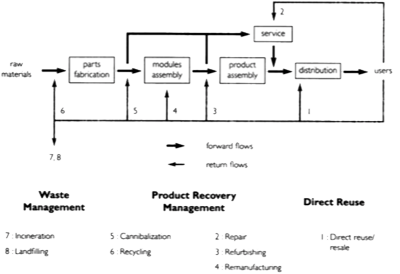

Closed Loop Supply Chains • Metamodeling of Deteriorating Reusable Articles

Metamodeling of Deteriorating Reusable Articles in a Closed Loop Supply Chain

Eoin Glennane

Dublin City University

Masters in Renewable Energy • June 2021

Abstract

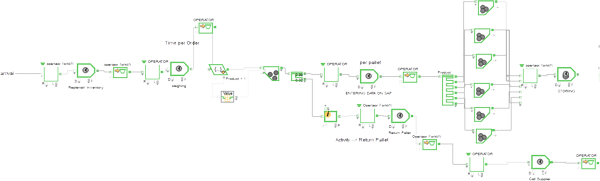

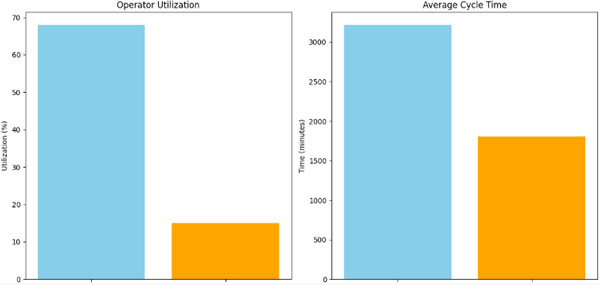

This paper presents a closed loop supply chain for a reusable, deteriorating tool. The tool is used in a manufacturing process on an item in a linear supply chain. A model is created for the linear item supply chain and the tool's closed loop supply chain to analyse the interactions between them and various input parameters, so that the output responses of the system can be modelled. Three approaches are taken to model the system: a brute force factorial design, a modified version of a Latin hypercube space filling design, and a fast flexible space filling design. All three methods are found to describe responses that require only a few inputs well, but none can accurately predict more complex responses without all of the relevant factors. Space filling designs should be used if more factors are needed, as they minimise the total number of simulations needed to produce an accurate model.

Approach

Using ExtendSim, models were developed to replicate a manufacturing process requiring reusable tools of varying quality. The models are modifiable to examine how changing certain parameters in the inventory control side of the model have an impact on the production side of the model to determine optimal inventory control practices.

Results and Conclusions

All three models developed for this project (Design Expert, RLHC, and FFFD) accurately predict the response with the fewest inputs: High Quality Time between Orders. However, none of the three models accurately captures the more complex responses of the system. One reason may be that the simulation did not analyse all of the relevant factors. Another may be that there is too much unaccounted randomness in the system for the models to predict the more complex performance indicators accurately. Space filling designs such as RLHC and FFFD perform well with high numbers of factors and should be explored further. The Design Expert model performs well, but the number of simulations it requires increases exponentially with the number of factors.
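The exponential growth mentioned above follows from how a full factorial design is built: every combination of factor levels is one simulation run, so k factors at L levels need L^k runs, while a space filling design uses a run budget chosen by the analyst. The sketch below only illustrates that count. The level values are arbitrary, and whether the Design Expert model used a full factorial with these level counts is an assumption.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple


def full_factorial(levels: Sequence[Sequence[float]]) -> List[Tuple[float, ...]]:
    """Every combination of factor levels; each tuple is one simulation run."""
    return list(product(*levels))


def factorial_run_count(n_factors: int, n_levels: int) -> int:
    """Runs needed for a full factorial with the same number of levels per factor."""
    return n_levels ** n_factors


design = full_factorial([[0, 1], [0, 1], [0, 1]])  # 2 levels x 3 factors
counts: Dict[int, int] = {k: factorial_run_count(k, 3) for k in range(2, 7)}
```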

Only two types of space filling designs for metamodel creation were explored in this paper: a modified version of the Latin Hypercube and JMP's Fast Flexible Filling Design. Both methods are one-shot, non-sequential designs, so sequential methods [12] may be able to create accurate models with even fewer simulations.

Closed Loop Supply Chains • Pull Type Production Control Strategies

Performance Analysis and Development of Pull-Type Production Control Strategies for Evolutionary Optimisation of Closed-Loop Supply Chains

Jonathan Ebner

Dublin City University

PhD in Manufacturing Engineering • January 2018

Abstract

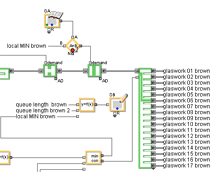

The objective of this thesis is to establish a Closed-Loop Supply Chain (CLSC) design, analysed through a series of simulation models, aimed at defining the highest-performing production control strategy while considering multiple related variables on both the forward and reverse flows of materials in manufacturing environments. Due to its stochastic nature, the reverse logistics side of the CLSC is an additional source of variance for inventory management and control strategies, since it implies an erratic supply of returned materials in addition to highly random customer demand. The productive system therefore faces highly variable inputs on both sides, a feature intrinsic to this line of research.

To test the operational performance of several pull-type production control strategies, a simulation-based research method was designed. The strategies tested were: Hybrid Extended Kanban CONWIP special case (HEKC-II), Hybrid Kanban CONWIP (HKC), Dynamic Allocation Hybrid Extended Kanban CONWIP special case (DNC HEKC-II), and Dynamic Allocation Hybrid Kanban CONWIP (DNC HKC). Each was tested in scenarios with high and low processing time variability and with 90% returned products and 40% returns from an open market system, for a total of 16 simulation models. Multi-objective evolutionary algorithms were used to generate the Pareto-optimal performance frontier, with the objective of simultaneously minimising two performance metrics: the overall average work in progress (WIP) and the average backlog queue length (BL) for the entire CLSC. Processes used in the recovery and recycling of end-of-life manufactured goods were examined. This research method identifies leading factors for improving the economic viability and sustainability of the technologies required to implement inventory control strategies effectively in highly complex closed-loop supply chains, with a focus on performance metrics and optimum utilisation of the resources available to the industry.

The dynamic allocation strategies delivered significant performance improvements, shifting the entire Pareto frontier forward with major gains on both metrics, and they did so in all scenarios tested. The modified HEKC-II, which has an optimisable parameter that allows it to be adjusted to match the well-established HKC, also performed as originally intended and outperformed HKC in some cases, especially at the higher variability level. It also provided grounds for the suggested improvements to, and flexibilisation of, the HEKC strategy.

A major contribution of this thesis was the successful implementation of another advanced control methodology, called here the Intelligent Self-Designing Production Control Strategy, which provided the highest control performance. It consisted essentially of DNC HEKC-II with the following modifications: I) an extensive increase in dynamically allocated authorisation cards; II) earlier triggering of the change in the number of cards according to the finished goods buffer level, plus an acceleration/deceleration factor for this change; III) the capability to downsize itself to resemble HKC during an optimisation process if production system conditions and variability require it. It produced a very significant shift of the performance frontier.
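The thesis summary does not give the exact card-adjustment rule, so the following is only a hedged sketch of the general idea of dynamically allocated authorisation cards. A hypothetical `DynamicCardPool` releases extra cards from a capped reserve when the finished goods buffer runs low and withdraws them when it fills up. The thresholds, the step size (a crude stand-in for the acceleration factor), and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class DynamicCardPool:
    base_cards: int   # cards always in circulation
    master_pool: int  # maximum number of extra cards that can be released
    low_level: int    # finished goods level at or below which cards are released
    high_level: int   # finished goods level at or above which cards are withdrawn
    step: int = 1     # cards moved per adjustment
    released: int = 0

    def active_cards(self) -> int:
        return self.base_cards + self.released

    def update(self, fg_level: int) -> int:
        """Adjust released cards from the current finished goods buffer level."""
        if fg_level <= self.low_level and self.released < self.master_pool:
            self.released = min(self.master_pool, self.released + self.step)
        elif fg_level >= self.high_level and self.released > 0:
            self.released = max(0, self.released - self.step)
        return self.active_cards()


pool = DynamicCardPool(base_cards=5, master_pool=3, low_level=2, high_level=6, step=2)
trace = [pool.update(level) for level in [1, 1, 4, 7, 7, 7]]
```

Between the two thresholds the card count is left unchanged, which prevents the controller from oscillating on small buffer fluctuations.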

Approach

ExtendSim models were created to process data sets the author gathered from mathematical model outputs, statistically filtered data, and histograms and statistics reported in peer-reviewed articles, business cases, and related recent publications. The results were then compiled in an intelligible fashion to produce visual scenarios in which companies or public entities can see the long-run advantages of CLSCs.

Results and Conclusions

The dynamic allocation policies, particularly the Dynamic Hybrid Extended Kanban CONWIP special case (DNC HEKC-II), showed superior overall production control performance throughout the research space considered.

Two factors were proposed in the modification approach: I) the Master Pool (MP), which sets the quantifiable level of the dynamic change in authorisation cards, and II) additional demand communication (DE), which slows the production pace during low-demand periods and avoids distributed demand starvation. Both produced a measurable improvement in inventory management and operational efficiency for the tested strategies implemented in a Closed-Loop Supply Chain environment.

Multi-objective optimisation achieved a significant performance improvement, which bears directly on the technological viability of CLSCs and their ability to cope with a high degree of variability arising simultaneously from processing times, supply, and demand. This contribution also provided grounds for the architecture of the Intelligent Self-Designing production control strategy.

The dynamic allocation strategies were tested under very tight constraints on the allowable range of production authorisation cards (PACs). They started with fewer cards and reached an equal range only after the dynamic change was triggered; this restriction was imposed to keep the comparative analysis against the control group (HKC) valid.

Scenarios with 40% recycled supply showed smaller improvements because the dynamic change was triggered less often and the reverse logistics had lower variability.

The HEKC-II, modified from the work of Dallery and Liberopoulos [4], had better results than HKC by Bonvik et al. [3] in scenarios with higher variability of supply with 90% returned material. It matched the control group HKC results with a lower variability of supply. It also provided grounds for the suggested improvements and flexibilisation of the HEKC Control Strategy.

Construction • Production Variability

Work-In-Process Buffer Design Methodology for Scheduling Repetitive Building Projects

Vicente González, Luis Fernando Alarcón, and Pedro Gazmuri

Pontificia Universidad Católica de Chile

PhD in Construction • July 2008

Abstract

Variability in production is one of the largest factors that negatively impacts construction project performance. A common construction practice to protect production systems from variability is the use of buffers (Bf). Construction practitioners and researchers have proposed buffering approaches for different production situations, but these approaches have faced practical limitations in their application.

In Multiobjective Design of Work-In-Process Buffer for Scheduling Repetitive Building Projects, a multiobjective analytic model (MAM) is proposed to develop a graphical solution for the design of Work-In-Process (WIP) Bf that overcomes these practical limitations, and it is demonstrated through the scheduling of repetitive building projects. Multiobjective analytic modeling is based on Simulation–Optimization (SO) modeling and Pareto Front concepts. The Simulation–Optimization framework uses Evolutionary Strategies (ES) as the optimization search approach, which allows optimum WIP Bf sizes to be designed by optimizing different project objectives (e.g., project cost, time, and productivity). The framework is tested and validated on two repetitive building projects. The SO framework is then generalized through Pareto Front concepts, allowing the MAM to be developed as nomographs for practical use. The application advantages of the MAM are shown through a project scheduling example. Results demonstrate project performance improvements and a more efficient and practical design of WIP Bf. Additionally, production strategies based on WIP Bf and lean production principles in construction are discussed.
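The ES configuration used in the SO framework is not described here. As a generic illustration of an evolution strategy, the sketch below is a minimal (1+1)-ES with the classic 1/5th success rule for step-size control. It uses a hypothetical quadratic `toy_objective` in place of the simulated project objectives and treats buffer sizes as continuous, although real WIP buffers would be integers. It is single-objective; Pareto fronts would come from repeating such searches over different objectives or weightings.

```python
import random
from typing import Callable, List, Tuple


def one_plus_one_es(
    f: Callable[[List[float]], float],
    x0: List[float],
    sigma: float = 1.0,
    iters: int = 2000,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """(1+1)-ES with the 1/5th success rule (minimisation)."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    for _ in range(iters):
        # Mutate every coordinate with Gaussian noise of scale sigma.
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        if fy <= fx:  # keep the offspring if it is at least as good as the parent
            x, fx = y, fy
            sigma *= 1.5  # success: widen the search
        else:
            sigma *= 1.5 ** -0.25  # failure: narrow it; balances at a 1/5 success rate
    return x, fx


def toy_objective(buffers: List[float]) -> float:
    """Hypothetical stand-in for a simulated project cost as a function of two WIP buffer sizes."""
    return (buffers[0] - 3.0) ** 2 + (buffers[1] - 5.0) ** 2


best_buffers, best_cost = one_plus_one_es(toy_objective, [0.0, 0.0])
```

With a stochastic simulation in place of `toy_objective`, each candidate would normally be evaluated over several replications before the acceptance test.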

Other Publications by this Researcher

González, V., Alarcón, L.F. and Gazmuri, P. "Design of Work In Process Buffers in Repetitive Projects: A Case Study". 14th International Conference for Lean Construction, Santiago, Chile, July 2006.

González, V. and Alarcón, L.F. "Design and Management of WIP Buffers in Repetitive Projects" (White Paper), 2005.

González, V., Rischmoller, L. and Alarcón, L.F. "Management of Buffers in Repetitive Projects: Using Production Management Theory and IT Tools". PhD Summer School, 12th International Conference for Lean Construction, Helsingør, Denmark, August 2004.

González, V., Rischmoller, L. and Alarcón, L.F. "Design of Buffers in Repetitive Projects: Using Production Management Theory and IT Tools". 4th International Postgraduate Research Conference, University of Salford, Manchester, U.K., April 2004.

González, V. and Alarcón, L.F. "Schedule Buffers (Buffer de Programación): A Complementary Strategy to Reduce the Variability in the Processes of Construction". Revista Ingeniería de Construcción, Pontificia Universidad Católica de Chile, Vol. 18, No. 2, pp. 109-119, May-August 2003.

Cross-Docking • Part I

CDAP Simulation Report

Zongze Chen

University of Pennsylvania

Masters in Electrical & Systems Engineering • May 13, 2010

Abstract

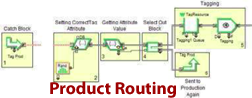

In this project we collaborated with National Retail Systems (NRS), who provided realistic cross-dock situations and data for studying and evaluating our modeling and optimization results. NRS's North Bergen facility receives goods from multiple vendors, then sorts and loads them onto outbound trailer trucks for a number of retail stores. Using ExtendSim, I developed a simulation model of two cross-docks operating at their New Jersey facility. The project had several main objectives:

- Develop a model of the cross-dock process. For simplicity, we started development with a 4×4 cross-dock, to be expanded later to realistic dimensions.

- Use the data generated by the GQ3AP algorithm we developed previously to simulate the process, analyze the total cost in that scenario, and discuss the optimization.

- Improve our optimization models to take into account the impact of truck arrival and departure times. Determine how one can improve cross-docking operations and what costs could be reduced through improved operational control.

The final report describes how simulation helps ensure success of cross-docking systems by determining optimal routing costs. Modeling methods and issues are also discussed as they apply to cross-docking. This report includes discussion of the actual processes employed by NRS, description of our models, simulation results and comparisons, and our conclusions.

Approach

The initial phase of the project involved data collection, including origin-destination (OD) freight volumes and scheduling times. In the next phase, in cooperation with NRS, we developed a discrete-event cross-dock simulation model and identified the best optimization methods. Finally, we incorporated what we learned into a set of suggested optimization procedures.

Other Reports by this Researcher

Course Reports in OPIM 910 course (introduction to optimization theory):

Personalized Diet Plans Optimization

University of Pennsylvania Evacuation Plan Design

Classroom Scheduling

Course Reports in STAT 541 course (Statistics Methods):

Boston Real Estate Analysis

Course Reports in STAT 520 course (Applied Econometrics):

Salary Weight Analysis by Gender

Research Assistant on a project in the Management Department at Wharton:

Entrepreneurship and Strategy

Cross-Docking • Part II

Anticipating Labor and Processing Needs of Cross-Dock Operations

Frederick Abiprabowo

University of Pennsylvania

Operations Research • December 2012

Project presented and awarded at

During the INFORMS Annual Conference 2013, the design team of Frederick Abiprabowo, Napat Harinsuit, Samuel Lim, and Willis Zhang, all from the University of Pennsylvania, were awarded the Undergraduate Operations Research Prize. This competition is held each year to honor a student or group of students who conducted a significant applied project in operations research or management science, and/or original and important theoretical or applied research in operations research or management science, while enrolled as an undergraduate student.

Their paper "Designing a Simulation Tool for Commercial Cross-Docking Application" uses ExtendSim to dynamically replicate the operations of a large cross-docking facility. The prototype model serves as a tool that enables cross-dock operators to evaluate assignment strategies in a risk-free, costless environment.

Abstract

In the supply chain literature, there are generally three kinds of studies regarding cross-docking:

- Fundamentals of cross-dock

- Distribution planning

- Operations inside the facility

Studies of cross-dock fundamentals take a high-level perspective and discuss the issues of cross-docking in relation to a company's distribution process, management, etc. Cross-docking can also be viewed as a method in the management of a supply chain. Distribution planning problems pertain to truck scheduling, vehicle routing, and network navigation. Finally, there are many research papers on the operations inside cross-docks.

Zongze Chen's CDAP simulation report dealt with the operations inside the cross-docking facility and formulated goods movement inside a cross-dock as a generalized quadratic three-dimensional assignment problem (GQ3AP). A simulation model was then designed after one of the cross-docking facilities of a retail company in New Jersey and tested to compare the cost of goods movement in two different scenarios:

- GQ3AP-optimized door assignment

- Manual door assignment

In this test, the scenario with optimized door assignment yielded a lower cost than the one with manual door assignment.
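The comparison above hinges on a cost that depends on where each pair of inbound and outbound trailers is docked. A minimal sketch, assuming a toy 3×3 instance with hypothetical pallet flows and door-to-door distances, scores an arbitrary "manual" assignment and finds the cheapest one by exhaustive search. It keeps only the quadratic-assignment core of the problem; the GQ3AP formulation used in the report is richer, and exhaustive search is viable only at toy sizes.

```python
from itertools import permutations

# Hypothetical data: pallets moving from inbound trailer i to outbound trailer j.
flow = [
    [10, 0, 5],
    [2, 8, 0],
    [0, 3, 12],
]
# Hypothetical travel distance (m) from strip (inbound) door a to stack (outbound) door b.
dist = [
    [5, 20, 35],
    [20, 5, 20],
    [35, 20, 5],
]


def handling_cost(in_doors, out_doors):
    """Total pallet-metres when inbound trailer i sits at strip door in_doors[i]
    and outbound trailer j sits at stack door out_doors[j]."""
    n_in, n_out = len(flow), len(flow[0])
    return sum(flow[i][j] * dist[in_doors[i]][out_doors[j]]
               for i in range(n_in) for j in range(n_out))


def best_assignment():
    """Exhaustive search over all door permutations (toy sizes only)."""
    doors = range(len(dist))
    return min(((handling_cost(a, b), a, b)
                for a in permutations(doors)
                for b in permutations(doors)),
               key=lambda t: t[0])


manual = ((0, 1, 2), (2, 0, 1))  # an arbitrary hypothetical "manual" assignment
print("manual cost:", handling_cost(*manual))
cost, a, b = best_assignment()
print("optimized cost:", cost, a, b)
```

Because the cost couples two assignment decisions through the flow matrix, the problem cannot be split into independent per-door choices, which is why dedicated optimization methods are needed at realistic dock sizes.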

This report continues where the previous report left off and addresses the future plans it proposed for the model.

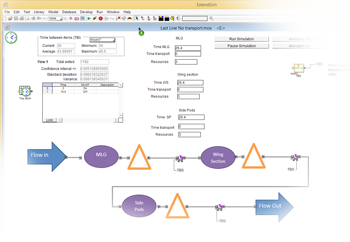

Crowd Management • Modelling Mass Crowd Using Discrete Event Simulation

A Case Study of Integrated Tawaf and Sayee Rituals during Hajj

Almoaid Abdulrahman A. Owaidah

University of Western Australia

Doctor of Philosophy (PhD) • October 2022

Supervised by: Doina Olaru, Mohammed Bennamoun, Ferdous Sohel, and R. Nazim Khan

Abstract

Mass Gathering (MG) events bring people together at a specific time and place, which, if not properly managed, could result in crowd incidents (such as injuries), the quick spread of infectious diseases, and other adverse consequences for participants. Hajj is one of the largest MG events, where up to 4 million people can gather at the same location at the same time every year. In the Hajj, pilgrims from all over the world gather in Makkah city in Saudi Arabia for five specific days to perform rituals at different sites.

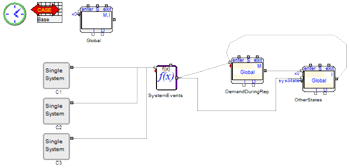

The facilities at the ritual sites can become congested, and occasionally overcrowded, when there are many pilgrims. Overcrowding at Hajj events makes crowd control and management difficult, resulting in accidents, casualties, and traffic jams. This thesis investigates new simulation- and modelling-based crowd management strategies to overcome overcrowding at pilgrimage sites and to ensure the safe and efficient transport of pilgrims. ExtendSim is used to test and validate all simulation models against real Hajj data from previous years, covering normal and emergency situations at different ritual sites, as well as the impact of arrival scheduling and of pilgrim movement between the holy sites.

Typically, around three million people participate in the Hajj and perform rituals that involve movement under strict space and time restrictions. Despite the efforts of the Hajj organisers, such massive crowd gatherings and movements cause overcrowding-related problems at the Hajj sites. Several previous simulation studies of the Hajj focused on individual rituals. Tawaf, followed by Sayee, are two important rituals performed by all pilgrims at the same venue on the same day, and they have a strong potential for crowd buildup and related problems. In contrast to previous work in the literature, this study examines the two events jointly rather than separately, integrating the Tawaf and Sayee rituals into one model. The validated model was applied to a wide range of scenarios in which different percentages of pilgrims were allocated to the various Tawaf and Sayee areas. The effects of these allocations on the time to complete Tawaf and Sayee indicate strategies for managing these two key Hajj rituals.

Approach

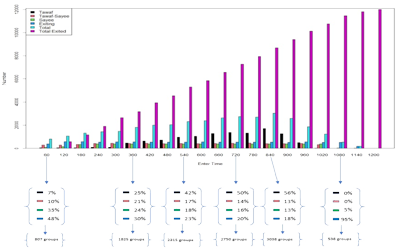

Total number of groups of pilgrims in the Tawaf and Sayee areas and in transit between Tawaf and Sayee, and the cumulative number of groups that had exited the Grand Mosque (GM), over time.

Most previous work examined in great detail the interaction between pilgrims during Tawaf (at the Grand Mosque) and Stoning the Devil (at the Aljamarat Bridge), which are the most crowded events of the Hajj. Given the confined space and weather conditions, incidents often occur, with tragic consequences. Instead of using an agent-based model to simulate interactions between individuals, I opted to model the whole sequence of the Hajj at the level of a guided group (up to 250 individuals with a local guide trained specifically for the event). The innovation lies in integrating the events and incorporating travel between sites, which had not been done before.
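Modelling at the level of guided groups rather than individuals can be pictured as a chain of capacity-limited stages. The sketch below rests entirely on hypothetical assumptions (groups released one per minute, fixed numbers of concurrent group slots in the Tawaf and Sayee areas, uniformly distributed ritual durations, a fixed transit time) and pushes groups through Tawaf, transit, and Sayee in first-come, first-served order, reporting when the last group finishes. It is not the thesis model, which was built in ExtendSim and validated on real Hajj data.

```python
import heapq
import random


def run_stage(ready_times, servers, duration_fn):
    """FIFO multi-slot stage: each group starts once it is ready and a slot is free.
    Returns each group's finish time, indexed like ready_times."""
    free_at = [0.0] * servers          # min-heap of times at which each slot frees up
    heapq.heapify(free_at)
    finish = [0.0] * len(ready_times)
    for g in sorted(range(len(ready_times)), key=lambda k: ready_times[k]):
        slot_free = heapq.heappop(free_at)
        start = max(ready_times[g], slot_free)
        finish[g] = start + duration_fn()
        heapq.heappush(free_at, finish[g])
    return finish


def simulate(n_groups=400, arrival_gap=1.0, tawaf_slots=40, sayee_slots=30,
             transit=10.0, seed=1):
    """All parameters are hypothetical; times are in minutes."""
    rng = random.Random(seed)
    arrivals = [k * arrival_gap for k in range(n_groups)]
    tawaf_done = run_stage(arrivals, tawaf_slots, lambda: rng.uniform(40, 60))
    sayee_ready = [t + transit for t in tawaf_done]
    sayee_done = run_stage(sayee_ready, sayee_slots, lambda: rng.uniform(50, 70))
    return max(sayee_done)


print(f"last group leaves after {simulate():.0f} min")
```

Varying `tawaf_slots`, `sayee_slots`, or the arrival gap mimics, in a very coarse way, the allocation and scheduling scenarios the study explores.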

The research will have implications for both academia and practice. Understanding the most challenging aspects of the Hajj, and whether and how scheduling pilgrims' arrivals at the various sites may alleviate crowding and improve the operation of the whole event, provides insights for organising other mass gathering events. Transport is expected to play a key role in improving the flow, but this has not yet been tested. On the managerial side, higher performance indicators mean a better experience for the pilgrims and safe, incident-free events. The results may assist in planning future events, understanding capacity limitations, and providing training tips for local guides on aspects that may cause issues during the event.

Results and Conclusions

Hajj is the largest mass gathering event in the world. Mitigating overcrowding at Hajj sites is one of the daunting tasks faced by Hajj officials. Prior planning of pilgrim movements, especially for the Tawaf and Sayee rituals, is recommended not only for better use of resources but also to avoid the deleterious effects of emergency situations. While previous studies presented different approaches to Tawaf or Sayee management separately, an important contribution of this work is an integrated Tawaf and Sayee model, built in ExtendSim, with various scenarios. The scenarios were developed to offer different managerial options and considered different aspects of pilgrim management at the entrances and exits of the Grand Mosque and during the Tawaf and Sayee rituals.

Without a micro-level description of physical structures or of individual entities (pilgrims), this study offers insights into the optimal allocation of pilgrims to control crowd density in the GM, in the areas most strained in terms of capacity, and into the conditions for evacuation (level of service (LoS), flow capacities, and times to traverse or cross the GM towards the exit gates). In addition, it shows that by scheduling the two rituals and adequately allocating pilgrims to the various levels of the Grand Mosque, larger numbers of pilgrims could potentially be accommodated within the same 30-hour time window. For example, the average time required for four million pilgrims to complete both Tawaf and Sayee is 1,783 min (assuming current conditions and no critical incidents), which suggests that planning could create reserves of capacity. Although the target was to simulate 3 million pilgrims, under the same conditions the model could simulate up to 4 million pilgrims within the allotted 30 hours.
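The 1,783-minute figure can be put in context with simple arithmetic: it is compared with the 30-hour window and converted into an implied average completion rate. The group count assumes every group is full at 250 pilgrims, so it is a lower bound on the number of groups; that assumption is ours, not a result from the thesis.

```python
MINUTES_PER_HOUR = 60

window_min = 30 * MINUTES_PER_HOUR      # allotted 30-hour window, in minutes
reported_min = 1_783                    # simulated average duration for four million pilgrims
pilgrims = 4_000_000
group_size = 250                        # maximum size of a guided group (assumed full)

slack_min = window_min - reported_min
print(f"{reported_min / MINUTES_PER_HOUR:.2f} h used, {slack_min} min of slack")
print(f"~{pilgrims / reported_min:,.0f} pilgrims per minute on average")
print(f"at least {pilgrims // group_size:,} groups, "
      f"~{pilgrims / group_size / reported_min:.1f} groups per minute")
```

The slack is small in absolute terms, which is consistent with the text's framing of 4 million as an upper level the model could still accommodate rather than a comfortable operating point.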

The simulation results confirm that the areas with the most critically high crowd density (yet preferred by pilgrims) are the Tawaf area (Mataf) and the Sayee Ground Level (GL). When these areas accommodated 30% to 80% of pilgrims, all pilgrims completed the rituals in a timely fashion. Conversely, when other Tawaf and Sayee areas were assigned high percentages of pilgrims, the scenarios showed delays and an inability to let all pilgrim groups complete the rituals within 30 hours. It is therefore recommended to distribute pilgrims gradually, starting with the critical Tawaf and Sayee areas (the Mataf and Sayee GL) and followed by the other levels. Another management option for organising the event is to allocate each pilgrim group to a specific gate for entry and exit.

download screenshots of ExtendSim model

Research site

Almoaid A. Owaidah on Google Scholar.

Other Publications by this Researcher

A. Owaidah, D. Olaru, M. Bennamoun, F. Sohel and R. Nazim Khan, "Modelling Mass Crowd Using Discrete Event Simulation: A Case Study of Integrated Tawaf and Sayee Rituals during Hajj", in IEEE Access, doi: 10.1109/ACCESS.2021.3083265.

Digital Twin • Reliability of an Assembly Plant

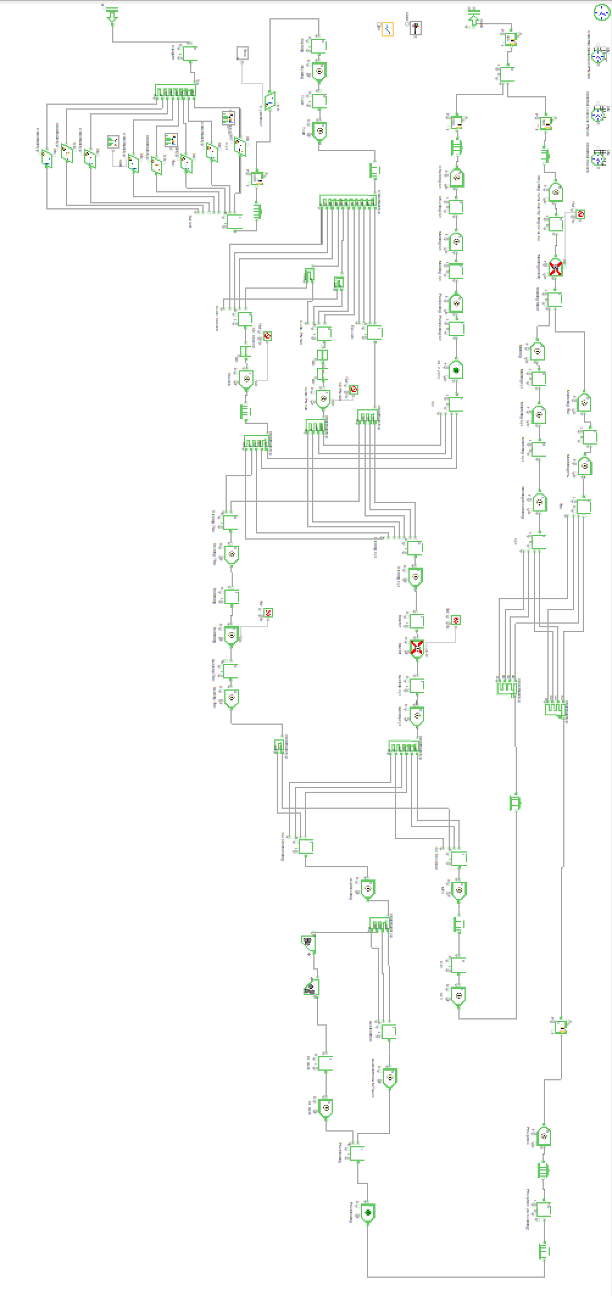

Application of Discrete Event Simulation for Assembly Process Optimization • Buffer and Takt Time Management

Pontus Persson & Tim Snell

Luleå Tekniska Universitet

Masters in Mechanical Engineering • May 2020

Abstract

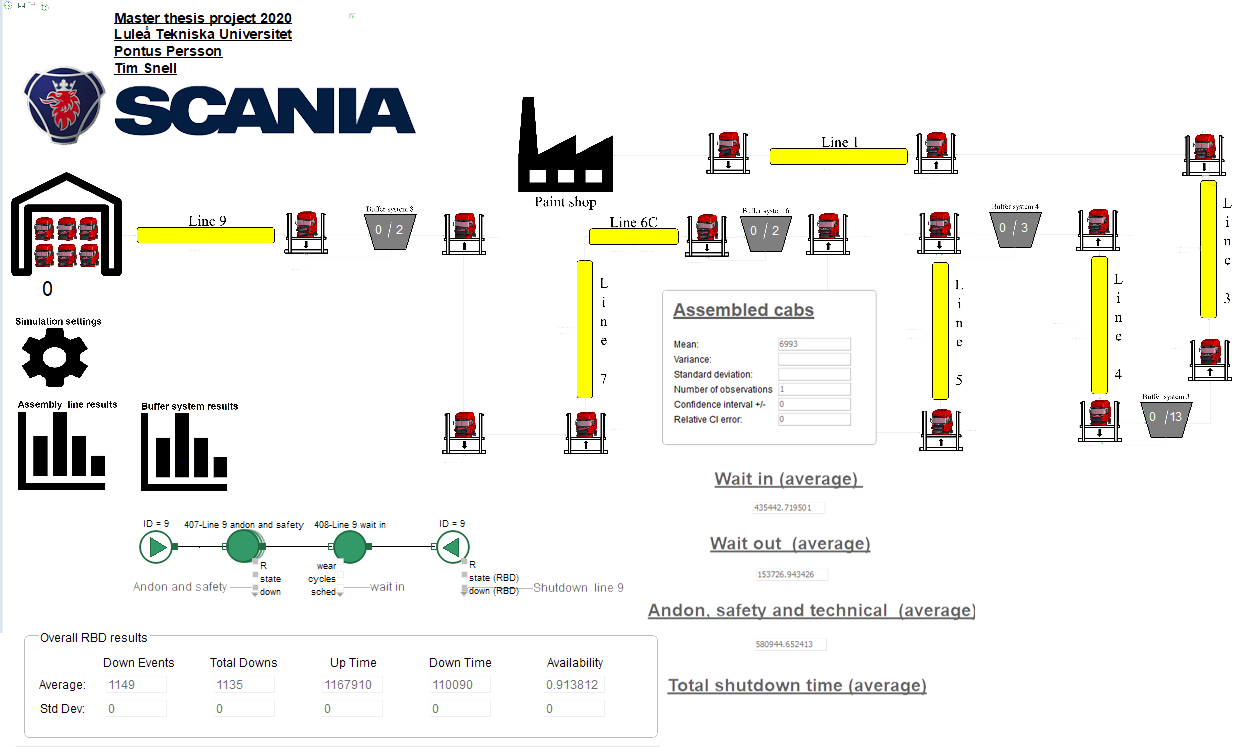

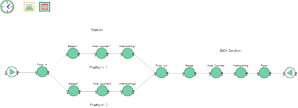

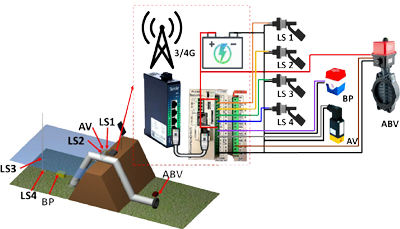

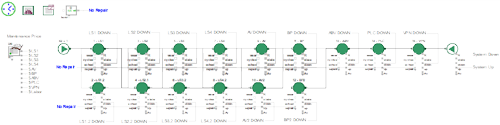

This master's thesis in mechanical engineering was carried out by two students at Scania in Oskarshamn. Its purpose was to investigate whether discrete event simulation using ExtendSim can be applied to increase Scania's assembly productivity. The objective was to investigate how buffer systems could be managed by varying the number of buffers and their transport speed. Assembly line takt times with regard to their availability were also investigated. The approach was to build a simulation model that provides valid decision-making information on these aspects. Process stop data were extracted and imported into ExtendSim, where the Reliability library was used to generate shutdowns.

Comparing 24 sets over 100 runs with one another gave a median standard deviation of 0.91%. Over a five-week period, the total number of assembled cabs differed from the real data by 4.77%, and the total shutdown time differed by 1.85% under the same conditions.
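The two validation measures above can be computed with the standard library. This is a minimal sketch under an interpretive assumption: each "set" is treated as one output measured across its replications, its spread is expressed as a percentage of its mean (coefficient of variation), and the median is taken over sets. The sample numbers are toy values, not Scania data.

```python
import statistics


def relative_std_pct(values):
    """Sample standard deviation as a percentage of the mean (coefficient of variation)."""
    return statistics.stdev(values) / statistics.mean(values) * 100


def median_relative_std(sets):
    """Median coefficient of variation over several sets of replications."""
    return statistics.median(relative_std_pct(s) for s in sets)


def relative_error_pct(simulated, observed):
    """Deviation of a simulated total from the observed total, in percent."""
    return abs(simulated - observed) / observed * 100


sets = [[100, 102, 98], [200, 200, 200]]   # hypothetical replication outputs
print(f"median relative std: {median_relative_std(sets):.2f}%")
print(f"relative error: {relative_error_pct(105, 100):.2f}%")
```

Using the median rather than the mean keeps one unusually noisy set from dominating the stability figure.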

Varying buffer spaces had the biggest effect for system 6A, where up to 20 more cabs could be assembled over five weeks. Increasing all buffer transport speeds by 40% also yielded up to 20 more assembled cabs over five weeks. Push and pull systems were compared as well, with the push system giving the best results: a 22-hour decrease in total shutdown time and 113 more assembled cabs over five weeks.

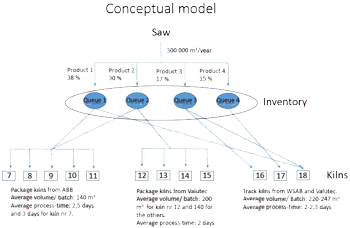

Approach

The plan was to first build a digital twin of the assembly line. Once the digital twin was built and validated, different scenarios could be evaluated to answer questions such as:

- What is the optimal state of the buffer system?

- How can the time to repair (TTR) and time between failures (TBF) of the assembly stations be optimized, and what are the effects?

To accomplish this, the researchers built a process map, a conceptual model, and a digital twin. After gathering process data, they could evaluate different scenarios.

Stoppage times were used to define TTR and TBF, which was essential for creating a valid model. The ExtendSim Reliability library was therefore an essential part of this project.
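Deriving TTR and TBF from stoppage records amounts to splitting a chronological stop log into repair durations and running intervals. A minimal sketch, assuming a hypothetical log of (stop start, stop end) times in minutes for one station; the exponential sampling at the end is a common first assumption when no distribution has been fitted, and none of this reflects the ExtendSim Reliability library's own interface.

```python
import random
import statistics

# Hypothetical stop log for one station: (stop_start, stop_end) in minutes.
stops = [(50, 58), (170, 175), (300, 320), (410, 414)]


def ttr_tbf(log):
    """Split a chronological stop log into repair durations (TTR) and
    running times from the end of one stop to the start of the next (TBF)."""
    ttr = [end - start for start, end in log]
    tbf = [nxt_start - prev_end
           for (_, prev_end), (nxt_start, _) in zip(log, log[1:])]
    return ttr, tbf


ttr, tbf = ttr_tbf(stops)
mttr, mtbf = statistics.mean(ttr), statistics.mean(tbf)
print(f"MTTR={mttr:.1f} min, MTBF={mtbf:.1f} min, "
      f"availability={mtbf / (mtbf + mttr):.3f}")

# Assumption: exponential TBF and TTR, used here only to illustrate sampling shutdowns.
rng = random.Random(42)
next_failure_in = rng.expovariate(1 / mtbf)
repair_time = rng.expovariate(1 / mttr)
```

With real data, one would fit distributions per station rather than assume exponential times, since repair times in particular are often skewed.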

Results and Conclusions

- The simulation model generates viable decision-making information and therefore fulfills its purpose.

- The simulation model produces results with a median standard deviation of 0.91% for 24 sets over 100 runs with the same settings.

- The total number of assembled cabs differs by 4.77% between the simulation model and weekly statistical reports.

- The total shutdown time differs by 1.85% between the simulation model and Power BI.

- The simulation model is accurate for the current assembly process and can be used within Scania in the near future.

- Increasing the number of spaces in buffer system 6A increases the number of assembled cabs by up to 20 over a five-week period. A positive trend can be seen when increasing the number of spaces in buffer systems 1 and 3.

- Increasing all buffer transport speeds by 40% results in up to 20 more assembled cabs over a five-week period.

- A push production system lowers the total shutdown time by 22 hours over a five-week period compared with the current state.

- A push production system increases the number of assembled cabs by 113 over a five-week period compared with the current state.
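Why extra buffer space raises output when stations stop unpredictably can be shown with a toy model of two stations in series. The sketch below assumes, hypothetically, one cab per minute per station, independent random stoppages with a fixed per-minute availability, and the same random sequence for every buffer size (common random numbers), so the buffer size is the only thing that changes. It is not the Scania digital twin.

```python
import random


def line_output(buffer_cap, steps=10_000, p_up=0.9, seed=7):
    """Two stations in series with a finite buffer between them, in discrete time.
    Each minute each station is independently up with probability p_up (hypothetical).
    Station 2 takes one cab from the buffer if it is up and the buffer is non-empty;
    station 1 then adds one cab if it is up and the buffer is not full."""
    rng = random.Random(seed)
    level, output = 0, 0
    for _ in range(steps):
        up1, up2 = rng.random() < p_up, rng.random() < p_up
        if up2 and level > 0:
            level -= 1
            output += 1
        if up1 and level < buffer_cap:
            level += 1
    return output


for cap in (1, 3, 10):
    print(cap, line_output(cap))
```

A larger buffer lets the downstream station keep working while the upstream one is stopped, and lets the upstream station keep producing while the downstream one is stopped; the gains flatten once the buffer rarely fills or empties.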

Education • Optimizing Performance of Educational Processes

Higher Education Management in Relation to Process Organization Theory

Maja Cukusic

University of Split

PhD in Business Informatics • March 2011

Project published as

"Online self-assessment and students' success in higher education institutions"

Maja Ćukušić, Željko Garača, Mario Jadrić

Computers & Education - An International Journal

March 2014 • ISSN 0360-1315

Abstract

This paper validates the effects of online self-assessment tests as a formative assessment strategy in a first-year undergraduate course. Students' results, such as test scores and pass rates, are compared across three different generations of students in the same course and are also judged against the exam results of other courses taught in the same semester. The analysis shows a statistically significant difference between the groups in half-semester test results and exam pass rates after online self-assessment tests were introduced. Positive effects on students' success are approximated for the whole institution using a simulation model. The results indicate that a small increase in pass rates could significantly improve overall success, i.e., decrease dropout rates.

The research model that preceded the simulation explored the correlation between ICT support of educational processes and their outcomes. To test the impact of ICT support, a sub-process was chosen: extensive self-evaluation quizzes delivered via an e-learning system were designed, implemented, and monitored within a first-year university course. The results were controlled and measured with regard to students' outcomes in several courses during the previous and current academic years (horizontal and vertical control of the results). Given the correlations between variables characterizing ICT support of the educational process and outcomes on tests and exams, ICT support of the educational process has a positive effect as expressed in relevant performance indicators.

A simulation model was developed to extrapolate the impact on key performance indicators (i.e., dropout rate and study completion time) to the whole institution, enabling analysis of potential opportunities. The model adheres to the study regulations of the Faculty of Economics (University of Split) and simulates outcomes for one generation of undergraduate students. Simulated results were compared with actual data from the information system to verify the correctness of the model.

Not all course environments allow the implementation of self-evaluation quizzes, which yield a slightly better exam pass rate (roughly 3%). Consequently, the simulation experiment applies the process change to only half of the courses and only to the largest group of students (60%). As a result, the percentage of students who drop out of their studies could be significantly lower: 36% compared with 45.67% in real life. For the entire system, this relatively small per-course improvement in exam results has a strong overall effect.
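Why a small per-course gain can have a strong system-wide effect is easiest to see through compounding. The sketch below computes the relative drop implied by the two dropout figures quoted above, then uses a deliberately simple toy model, assumed here and not taken from the paper: a student must pass k courses, each independently with probability p, so the chance of passing all of them is p to the power k. The course count and pass rates are hypothetical.

```python
def pass_all(p, k):
    """Probability of passing k courses when each is passed independently with probability p."""
    return p ** k


reported_real, reported_sim = 45.67, 36.0   # dropout rates (%) quoted above
print(f"relative drop in dropout: {(reported_real - reported_sim) / reported_real:.1%}")

# Hypothetical per-course pass rates and course count, for illustration only.
k = 10
base, improved = 0.80, 0.83
print(f"pass all {k}: {pass_all(base, k):.3f} -> {pass_all(improved, k):.3f}")
```

In this toy setting a 3-point per-course gain raises the chance of clearing all ten courses by more than 40% in relative terms; the faculty's simulation follows actual study regulations, so its numbers differ, but the compounding mechanism is the same intuition.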

download paper (in English)

download paper (in Croatian)

Other Publications by this Researcher

For a complete list of publications by Maja Ćukušić, please go to http://bib.irb.hr/lista-radova?autor=300571.

Feedstock Supply Chain for Biofuels and Biochemicals

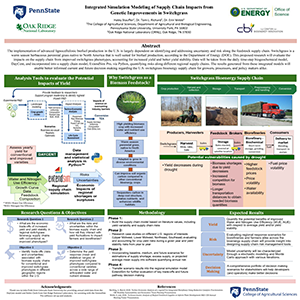

Integrated Simulation Modeling for Supply Chain Impacts of Genetic Improvements in Switchgrass

Haley Stauffer

Pennsylvania State University

Master of Science in Agricultural and Biological Engineering • July 2020

Abstract

The implementation of advanced lignocellulosic biofuel production in the U.S. is largely dependent on identifying and addressing uncertainty and risk along the feedstock supply chain. Switchgrass is a warm-season herbaceous perennial grass native to North America that is well suited for biofuel production, according to the Department of Energy (DOE). This proposed research will evaluate the supply chain impacts of improved switchgrass phenotypes, accounting for increased yield and better yield stability. Data will be taken from DayCent, a daily time-step biogeochemical model, and incorporated via Python into a supply chain model built in ExtendSim Pro, quantifying risks along different regional supply chains. The results generated by these integrated models will enable better-informed current and future decision making about the U.S. switchgrass bioenergy supply chain for growers, processors, and policy makers alike.

Approach

The research strategy involved using the existing framework from Oak Ridge National Laboratory's (ORNL) supply chain modeling of poplar and implementing the mass and energy metrics for switchgrass. Mass and energy inputs were gathered for the ExtendSim model to determine the cost points at which a certain switchgrass phenotype would prove more advantageous environmentally (yield, yield stability, drought resistance) and economically. Selected equipment was incorporated at different stages, along with its presumed quantity, for the regional field case studies (Tennessee, Pennsylvania, and Iowa). Equipment costs and energy usage were also modeled, as were the costs of supply shortage and supply surplus scenarios.
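The link between yield stability and supply chain cost can be sketched with a simple shortage/surplus calculation. All parameters below (demand, planted area, per-tonne penalties, and the yield series of two phenotypes with the same average yield) are hypothetical placeholders, not ORNL or thesis figures, and the cost structure is an assumed simplification of the scenarios described above.

```python
from dataclasses import dataclass


@dataclass
class CostParams:
    """All values are hypothetical placeholders."""
    demand_t: float            # biorefinery demand per season (dry tonnes)
    shortage_cost: float       # $/t to cover a shortfall with purchased feedstock
    surplus_cost: float        # $/t to store or dispose of excess biomass


def supply_cost(yield_t_per_ha, area_ha, p):
    """Shortage or surplus cost for one season given a yield outcome."""
    supply = yield_t_per_ha * area_ha
    if supply < p.demand_t:
        return (p.demand_t - supply) * p.shortage_cost
    return (supply - p.demand_t) * p.surplus_cost


params = CostParams(demand_t=100_000, shortage_cost=60.0, surplus_cost=15.0)
area = 10_000
# Two hypothetical phenotypes over the same five seasons: same mean yield (10 t/ha),
# but the improved one is more stable from year to year.
baseline = [12.0, 7.0, 11.0, 8.0, 12.0]
improved = [10.5, 9.5, 10.0, 9.5, 10.5]
for name, yields in (("baseline", baseline), ("improved", improved)):
    total = sum(supply_cost(y, area, params) for y in yields)
    print(name, f"${total:,.0f}")
```

Even with identical average yields, the more stable phenotype incurs far lower shortage and surplus costs in this toy setting, which is why the research accounts for yield stability and not only yield.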

Results and Conclusions

Ms. Stauffer was awarded first place in the poster competition at the Northeast Agricultural and Biological Engineering Conference (NABEC) for her work on this project.

ORNL will use this data to further its efforts in industrial-scale research on bioenergy production from switchgrass. This thesis adds to the existing literature and improves understanding of switchgrass supply chain needs and management.

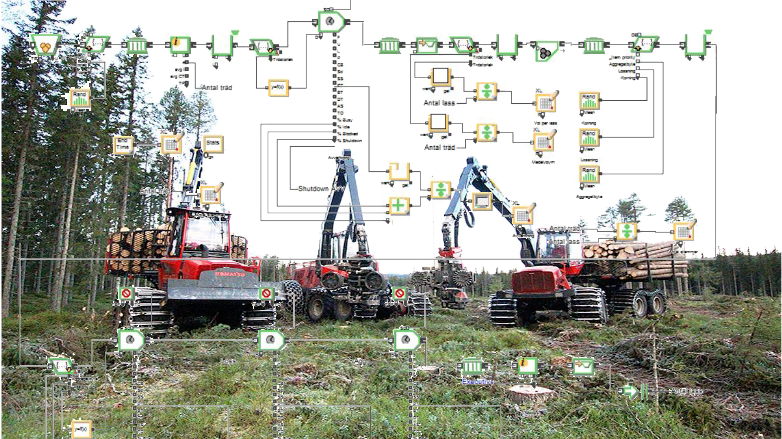

Forest Machine Systems

Comparison Between a Two-Machine System and Komatsu X19 Harwarder

Petter Berggren & Philip Öhrman

Swedish University of Agricultural Sciences

Bachelor in Forest Technology • September 2017

Abstract

Today, commercial forestry almost exclusively uses a two-machine system in which a harvester fells, delimbs, cuts, and sorts the wood on the ground. The wood is then picked up by a forwarder, which transports it from the forest to the road. The system is proven and has been developed for decades. Earlier attempts to improve efficiency have been made with another type of system, in which a harwarder manages the entire process, primarily in thinning. Komatsu has developed a new harwarder (X19) aimed at final fellings, and this machine is the basis for this study. The work consisted of modeling the two competing systems in ExtendSim using time-study data and running simulations. The aim of the simulation is to find out whether, when, and how well the harwarder can compete with the current two-machine system.

Approach

Two different forest machine systems deliver roundwood to roadside. The traditional system consists of two machines: a harvester (felling trees) and a forwarder (hauling trees to roadside). The single-machine system consists of a harwarder, doing both felling and hauling. Our research compares the productivity and economy between the two systems.

The goal was to update earlier research results in this area with new knowledge, using data from modern machines and taking advantage of the opportunities for discrete-event simulation modelling in ExtendSim.

Results and Conclusions

Simulation results show that the harwarder has significantly lower process costs in small projects with short terrain transport distances. This is mainly due to the cost of transporting the machines between sites, and to the harwarder saving loading time by cutting the logs directly onto its load carrier, unlike the two-machine system.
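The machine-transport part of this result can be illustrated with a back-of-envelope unit-cost sketch: relocation is a fixed cost per site, so it weighs most heavily on small projects, and a system that moves one machine instead of two gains an edge there. All hourly costs, productivities, and relocation costs below are hypothetical, and the sketch ignores terrain transport distance and the loading-time effect that the simulation also captured.

```python
def cost_per_m3(volume_m3, machines):
    """Unit cost for one harvesting site.
    machines: list of (hourly_cost, productivity_m3_per_hour, relocation_cost) tuples.
    All numbers used below are hypothetical."""
    running = sum(hourly / prod for hourly, prod, _ in machines)   # $/m3 while working
    relocation = sum(reloc for _, _, reloc in machines)            # fixed $ per site
    return running + relocation / volume_m3


two_machine = [(1200, 30.0, 6000), (900, 25.0, 6000)]   # harvester, forwarder
harwarder = [(1350, 15.0, 6000)]                        # one machine does both

for volume in (200, 1000, 5000):
    print(volume,
          round(cost_per_m3(volume, two_machine), 1),
          round(cost_per_m3(volume, harwarder), 1))
```

With these made-up inputs the harwarder is cheaper on the smallest site and the two-machine system wins on the larger ones; the break-even volume moves with every parameter, which is exactly what a simulation with real time-study data pins down.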

Other Publications by this Researcher

B. Talbot, T. Nordfjell and K. Suadicani. "Assessing the Utility of Two Integrated Harvester-Forwarder Machine Concepts Through Stand-Level Simulation". International Journal of Forest Engineering (2003), 14:2, 31-43.

ExtendSim has also been used in a doctoral thesis at SLU: Eriksson, Anders. "Improving the Efficiency of Forest Fuel Supply Chains". Swedish University of Agricultural Sciences, Department of Energy and Technology (2016).

Healthcare • Emergency Department Crowding

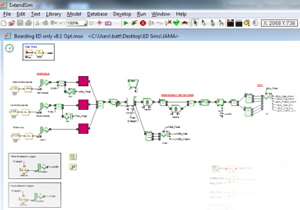

The Financial Consequences of Lost Demand and Reducing Boarding in Hospital Emergency Departments

Bob Batt

University of Pennsylvania • Wharton School

PhD in Operations Management • April 2011

Project published in and presented at

Results of Mr. Batt's project were published in the Annals of Emergency Medicine in October 2011. The American College of Emergency Physicians considered the findings significant enough to issue a press release about the paper. The main finding was that reducing emergency department boarding by one hour could generate approximately $2.7M per year if dynamic admitting policies are used to control elective patient arrivals.

This finding is novel in that no previous work had combined the ED revenue gains from reducing boarding with the potential revenue losses from admitting fewer elective patients. Mr. Batt presented this paper at the Production & Operations Management Society conference in Reno, Nevada, on April 29, 2011.

Abstract

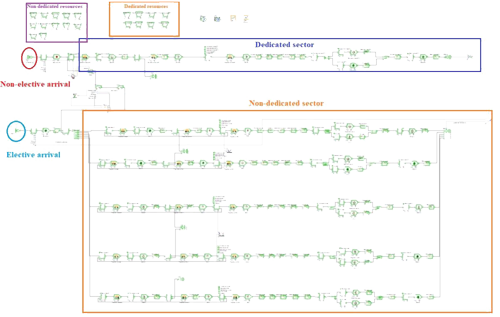

This project explores the operational ramifications of crowding in hospital emergency departments. A common indicator of crowding is patients “boarding” in the emergency department while awaiting transfer to an inpatient bed in the hospital. Boarding is a controversial topic in the medical community because it has been suggested that it is a way to tacitly prioritize high-dollar elective patients over lower-value emergency patients. However, the financial impact of boarding is not obvious, since boarding creates congestion in the emergency department, leading to higher levels of lost demand from patients leaving without treatment and ambulances being diverted. We use discrete event simulation to model a hospital under various boarding regimes and patient prioritization schemes. We find that reducing boarding can be not only operationally efficient but also financially beneficial for the hospital.
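The mechanism described in the abstract (boarders keep occupying ED beds, so congestion turns into lost demand) can be illustrated with a small event-driven simulation in plain Python. This is a hypothetical sketch, not Mr. Batt's ExtendSim model: the function name and all parameters are made up, boarding is modeled as a fixed extra bed-holding time for admitted patients, and patients who arrive to a full waiting room leave immediately, a crude stand-in for leaving without treatment. Ambulance diversion and elective admissions are not modeled.

```python
import heapq
import random


def simulate_ed(boarding_hours: float, beds: int = 10, arrival_rate: float = 4.0,
                mean_treatment: float = 2.0, p_admit: float = 0.4,
                max_queue: int = 10, horizon: float = 10_000.0, seed: int = 1) -> float:
    """Return the fraction of arrivals lost because the waiting room was full.

    Hypothetical model: Poisson arrivals (per hour), exponential treatment times,
    admitted patients hold their ED bed for an extra `boarding_hours`.
    """
    rng = random.Random(seed)
    events = []  # heap of (time, sequence number, kind)
    seq = 0

    def schedule(t: float, kind: str) -> None:
        nonlocal seq
        heapq.heappush(events, (t, seq, kind))
        seq += 1

    free_beds, waiting, arrived, lost = beds, 0, 0, 0

    def start_service(now: float) -> None:
        nonlocal free_beds
        free_beds -= 1
        hold = rng.expovariate(1.0 / mean_treatment)
        if rng.random() < p_admit:
            hold += boarding_hours  # boarder keeps the ED bed while awaiting transfer
        schedule(now + hold, "departure")

    schedule(rng.expovariate(arrival_rate), "arrival")
    while events:
        now, _, kind = heapq.heappop(events)
        if now > horizon:
            break
        if kind == "arrival":
            arrived += 1
            schedule(now + rng.expovariate(arrival_rate), "arrival")
            if free_beds > 0:
                start_service(now)
            elif waiting < max_queue:
                waiting += 1
            else:
                lost += 1  # waiting room full: demand is lost
        else:  # a departure frees a bed for the next waiting patient
            free_beds += 1
            if waiting > 0:
                waiting -= 1
                start_service(now)
    return lost / arrived
```

Comparing `simulate_ed(0.0)` with `simulate_ed(4.0)` under these placeholder inputs should show the lost-demand fraction rising sharply once boarders push the ED's bed load past capacity; the exact figures depend on the made-up parameters and the random seed.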

Healthcare • Hospital Prep for a Mass Casualty Event

Assessing Hospital System Resilience to Events Involving Physical Damage and Demand Surge

Bahar Shahverdi, Mersedeh Tariverdi, Elise Miller-Hooks

George Mason University • Department of Civil, Environmental, and Infrastructure Engineering

PhD in Transportation • July 2019

PhD Dissertation Published In

Socio-Economic Planning Sciences

July 25, 2019

Highlights:

- Investigates potential benefits of hospital coalitions in a disaster.

- Assesses the potential value of patient transfers and resource sharing.

- Considers joint capacity enhancement alternatives.

- Discrete event simulation conceptualization of hospital system.

- Quantifies hospital system resilience to pandemic, MCI, and disaster events with damage.

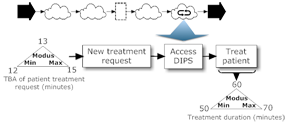

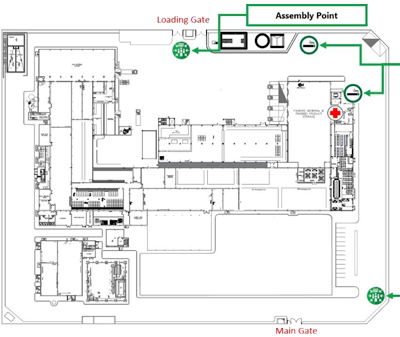

Abstract

This paper investigates the effectiveness of formalized collaboration strategies through which patients can be transferred and resources, including staff, equipment and supplies, can be shared across hospitals in response to a disaster incident involving mass casualties and area-wide damage. Inflicted damage can affect hospital infrastructure and its supporting lifelines, thus impacting capacity and capability or, ultimately, services that are provided. Using a discrete event simulation framework and underlying open queuing network conceptualization involving patient flows through 9 critical units of each hospital, impacts on critical resources, physical spaces and demand are modeled and the hospital system's resilience to these hazard events is evaluated.
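The open queuing network conceptualization mentioned above starts from the standard traffic equations, which give each unit's mean patient arrival rate from external arrivals plus internal routing. The sketch below is a hypothetical illustration, not the paper's discrete event model: it uses four made-up units instead of the nine in the study, and the routing probabilities, bed counts, and service rates are all assumptions chosen to show how damage that removes beds can push a unit past capacity.

```python
def traffic_rates(external, routing, iterations=200):
    """Solve the open-network traffic equations lam = external + lam @ routing.

    routing[i][j] is the probability that a patient leaving unit i goes to unit j;
    a row may sum to less than 1 (the remainder leaves the hospital). Fixed-point
    iteration converges when every patient eventually leaves the network.
    """
    n = len(external)
    lam = list(external)
    for _ in range(iterations):
        lam = [external[j] + sum(lam[i] * routing[i][j] for i in range(n))
               for j in range(n)]
    return lam


def utilizations(lam, servers, service_rate):
    """rho_i = lam_i / (c_i * mu_i); rho_i >= 1 means unit i cannot keep up."""
    return [l / (c * mu) for l, c, mu in zip(lam, servers, service_rate)]


# Hypothetical four-unit hospital; rates are patients per hour.
UNITS = ["ED", "OR", "ICU", "Ward"]
EXTERNAL = [10.0, 0.0, 0.0, 0.0]
ROUTING = [
    [0.0, 0.2, 0.1, 0.3],  # ED -> OR, ICU, Ward (40% discharged)
    [0.0, 0.0, 0.5, 0.5],  # OR -> ICU, Ward
    [0.0, 0.0, 0.0, 0.8],  # ICU -> Ward (20% leave)
    [0.0, 0.0, 0.0, 0.0],  # Ward -> discharge
]
SERVICE_RATE = [1.0, 0.5, 0.05, 0.02]  # per bed/room per hour (hypothetical)
INTACT = [15, 6, 50, 400]
DAMAGED = [15, 6, 30, 400]  # hypothetical: damage removes 20 ICU beds

if __name__ == "__main__":
    lam = traffic_rates(EXTERNAL, ROUTING)
    for name, beds in (("intact", INTACT), ("damaged", DAMAGED)):
        rho = utilizations(lam, beds, SERVICE_RATE)
        print(name, {u: round(r, 2) for u, r in zip(UNITS, rho)})
```

With these illustrative numbers the ICU runs at ρ = 0.8 when intact but at about 1.33 after losing 20 of its 50 beds, so it can no longer keep up without patient transfers or shared capacity. Mean-rate equations like these ignore the transient surge a disaster produces, which is where a discrete event simulation such as the study's is needed.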

Approach

ExtendSim was used to model individual hospitals as well as a small health care network. First, all possible patient routes through the hospitals (for different trauma levels) were modeled. Then, performance measures were analyzed under different collaboration scenarios.

Results and Conclusions

Findings from numerical experiments on a case study involving multiple hospitals spaced over a large metropolitan region replicating a system similar to the Johns Hopkins Hospital System show the potential of strategies involving not only transfers and resource sharing, but also joint capacity enhancement alternatives to improve post-disaster emergency health care service delivery through joint action.

Other Publications, Reports, & Projects from this Team

Rivinius, Jessica. "Engineered for Resilience". START, The National Consortium for the Study of Terrorism and Responses to Terrorism. July 25, 2016.

TariVerdi M., Miller-Hooks E., Adan M. "Assignment Strategies for Real-Time Deployment of Disaster Responders". International Journal of Operations and Quantitative Management, Special Issue on Humanitarian Operations Management. January 2015.

Mollanejad M., Faturechi R., TariVerdi M., Kim M. "A Generic Heuristic for Maximizing Inventory Slack in the Emergency Medication Distribution Problem". Transportation Research Board 93rd Annual Meeting, Washington, D.C. 2014.

Healthcare • Nanomedicine

Nanomedicine

Janet Cheung

University of Southern California

Non-profit research project • January 2015

Project details

As part of a completely non-profit research project organized by our advisor, we aimed to determine how effective nanobots could be at curing cancer compared with existing cancer drugs. Nanobots could integrate the diagnosis and treatment of cancer in a cohesive, potentially non-invasive unit through precise, targeted operations at the cellular level.

The key problems with conventional treatment are the method of drug delivery and the concentration of the drug cocktail required to destroy the cancerous cells. Nanobots could allow the drugs to be directed to the exact locations where cancerous cells have been observed, so that only the malignant tissue is affected and healthy tissue is spared. Nanomedicine therefore has the potential to revolutionize the way medicine is practiced around the world, but biocompatibility at the nanoscale is clearly one of many major challenges that must be overcome.

The model focuses on drug delivery via the bloodstream, which is the most common and preferred delivery method. It includes skeletons for both active-targeting and passive-targeting nanobots. The simulated nanobots flow through the bloodstream until they are attracted to the tumor. The model also accounts for several possible drug delivery failures (early deployment of the drug, power failure, and time constraints). For now, the model determines when failures occur using various probability distributions as placeholders for more accurate values. Upon failure, the nanobot's representation exits the model, and the number of each type of failure is shown in a bar graph. When a nanobot successfully attaches to the tumor, its representation incurs a delay that accounts for attachment and the actual drug deployment. A second bar graph compares the number of successful deliveries with the number of failed deliveries.
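The failure logic described above amounts to a competing-risks Monte Carlo: each nanobot draws a time for every possible event, and whichever happens first decides its fate. The sketch below is hypothetical and is not the project's ExtendSim model; the function name, the exponential distributions, their means, and the 24-hour time limit are placeholders, much as the model itself uses placeholder distributions. The post-attachment delay is omitted because it does not change the outcome counts that the bar graphs display.

```python
import random
from collections import Counter


def simulate_nanobots(n: int, seed: int = 0, time_limit: float = 24.0,
                      mean_to_tumor: float = 10.0,
                      mean_early_release: float = 60.0,
                      mean_power_failure: float = 40.0) -> Counter:
    """Count outcomes for n nanobots; all times (hours) are hypothetical placeholders."""
    rng = random.Random(seed)
    outcomes = Counter()
    for _ in range(n):
        # Draw a time for each competing event; the earliest one wins.
        event_times = {
            "delivered": rng.expovariate(1.0 / mean_to_tumor),
            "early deployment": rng.expovariate(1.0 / mean_early_release),
            "power failure": rng.expovariate(1.0 / mean_power_failure),
            "time limit": time_limit,
        }
        outcomes[min(event_times, key=event_times.get)] += 1
    return outcomes


if __name__ == "__main__":
    counts = simulate_nanobots(10_000)
    failures = sum(v for k, v in counts.items() if k != "delivered")
    print("failures by type:", {k: v for k, v in counts.items() if k != "delivered"})
    print("successful vs failed:", counts["delivered"], failures)
```

The two printed summaries correspond to the model's two bar graphs: failures broken down by type, and successful versus failed deliveries.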